n8n Google Search Leads

Scraping the top-ranking websites for a search term can serve many purposes. Some companies hand the results to sales reps so they can reach out to potential clients, while others use them to track SEO competitors.

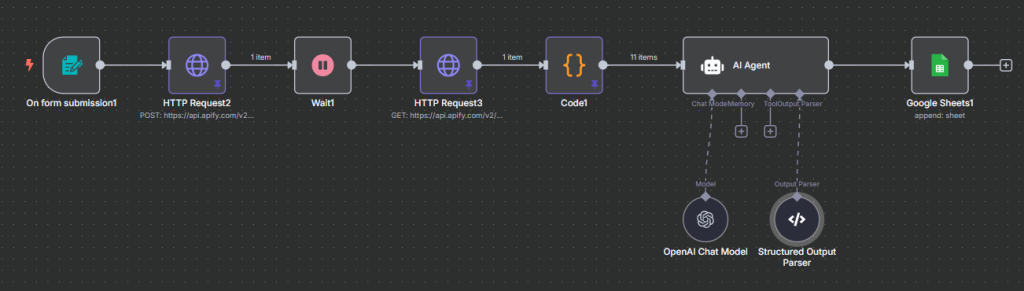

In this n8n lesson, I’ll show you how to scrape the Google Search Engine Results Page (SERP) with n8n. The goal is to collect the top-ranking sites and then use an AI Agent to classify what type of business each one is.

The workflow schematic is below. Also, if you need help with n8n workflows or data projects, I am taking on freelance clients. Reach out!

If you are brand new to n8n, you can sign up here.

Here is a video walkthrough of this example. Feel free to watch it instead of reading the article.

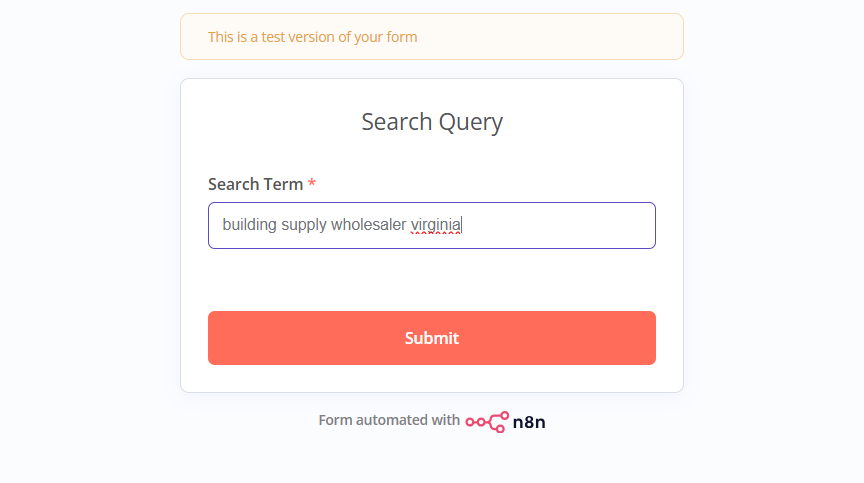

Form Submission

To start, a sales rep enters a search term into a form. We can build the full workflow from a form trigger.

Apify

To scrape the Google SERP, we’ll use Apify, which has a large library of ready-made actors that integrate nicely with n8n. You can get this Apify Actor here.

To implement this in our workflow, we need three nodes, two of which are HTTP Requests: we POST data to Apify, Wait, and then GET our data.
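For reference, here is a minimal Python sketch of the two URLs such HTTP Request nodes could call. It assumes Apify's v2 REST API, where `POST /v2/acts/{actorId}/runs` starts a run (the returned run object includes a `defaultDatasetId`) and `GET /v2/datasets/{datasetId}/items` downloads the results. The actor ID `apify/google-search-scraper` and the token value are assumptions; swap in the actor and credentials you actually use.

```python
from urllib.parse import quote, urlencode

APIFY_BASE = "https://api.apify.com/v2"  # Apify REST API v2 base URL (assumed)


def start_run_url(actor_id: str, token: str) -> str:
    """URL for the POST that starts an actor run (the 'Post' HTTP Request node)."""
    # In API paths, "username/actor-name" is written with "~" instead of "/".
    safe_id = quote(actor_id.replace("/", "~"), safe="~")
    return f"{APIFY_BASE}/acts/{safe_id}/runs?{urlencode({'token': token})}"


def dataset_items_url(dataset_id: str, token: str) -> str:
    """URL for the GET that downloads the run's results (the 'Get' HTTP Request node)."""
    query = urlencode({"token": token, "format": "json"})
    return f"{APIFY_BASE}/datasets/{quote(dataset_id, safe='')}/items?{query}"


print(start_run_url("apify/google-search-scraper", "MY_TOKEN"))
# https://api.apify.com/v2/acts/apify~google-search-scraper/runs?token=MY_TOKEN
```

The dataset ID for the second call would come from the response to the first one, which is why the GET has to wait until the run has finished.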

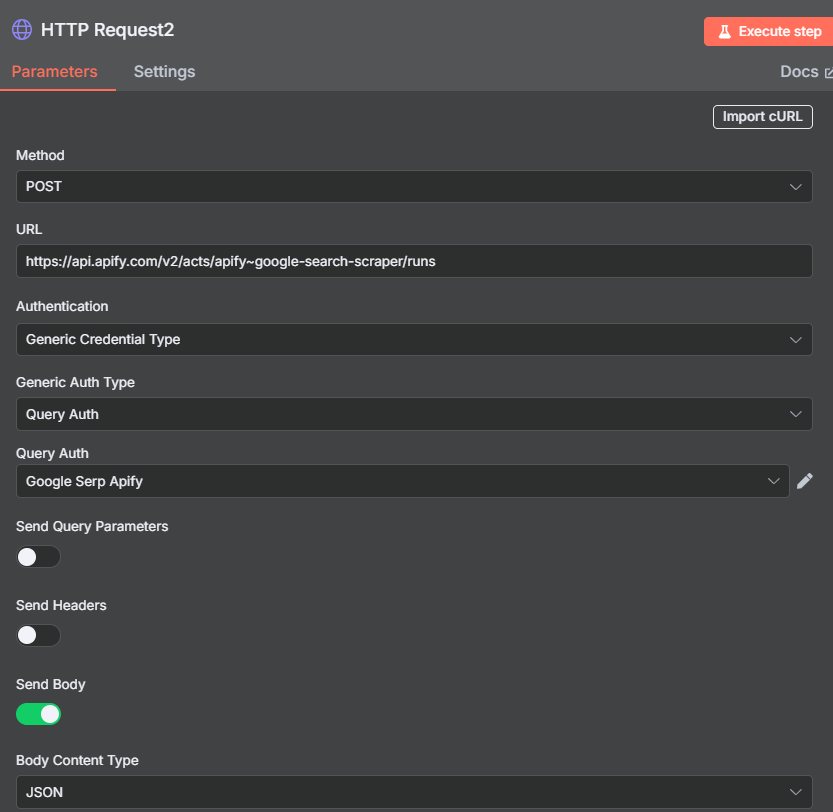

HTTP Request - Post

We POST to Apify so it can start preparing our data. The request body is:

```json
{
  "queries": "{{ $json["Search Term"] }}",
  "resultsPerPage": 10
}
```
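Because the expression is placed directly inside a JSON string, a search term that contains a double quote or backslash could produce an invalid body. Below is a minimal Python sketch of building the same body with proper escaping. `build_apify_body` is a hypothetical helper, and the input dict mirrors the form field `Search Term` used above.

```python
import json


def build_apify_body(form_item: dict, results_per_page: int = 10) -> str:
    """Serialize the POST body from a form item such as {"Search Term": "..."}."""
    term = form_item["Search Term"].strip()
    if not term:
        raise ValueError("Search Term must not be empty")
    body = {"queries": term, "resultsPerPage": results_per_page}
    # json.dumps escapes quotes and backslashes, so any search term yields valid JSON.
    return json.dumps(body)


print(build_apify_body({"Search Term": 'building supply "wholesale" virginia'}))
# {"queries": "building supply \"wholesale\" virginia", "resultsPerPage": 10}
```

Inside n8n, a comparable (untested here) fix would be an expression such as `{{ JSON.stringify($json["Search Term"]) }}` used in place of the quoted string.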

Wait

I set a 20-second wait between the POST and the GET. Without a delay, the data is sometimes not ready yet.
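A fixed wait can be too short for a slow run and wasteful for a fast one. One alternative is to poll the run's status until it finishes. The sketch below assumes Apify's `GET /v2/actor-runs/{runId}` endpoint, which reports a status such as `RUNNING` or `SUCCEEDED`; the HTTP call is injected as `fetch_status` so the loop can be tried without network access. `wait_for_run` and its parameters are hypothetical.

```python
import time
from typing import Callable

# Apify run states after which the run will not change (assumed list).
TERMINAL_STATES = {"SUCCEEDED", "FAILED", "ABORTED", "TIMED-OUT"}


def wait_for_run(
    fetch_status: Callable[[], str],
    poll_seconds: float = 5.0,
    timeout_seconds: float = 120.0,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Poll fetch_status() until the run reaches a terminal state or the timeout expires."""
    waited = 0.0
    while True:
        status = fetch_status()
        if status in TERMINAL_STATES:
            return status
        if waited >= timeout_seconds:
            raise TimeoutError(f"run still {status} after {waited:.0f}s")
        sleep(poll_seconds)
        waited += poll_seconds


# Simulated run that finishes on the third check; sleeping is skipped for the demo.
statuses = iter(["READY", "RUNNING", "SUCCEEDED"])
print(wait_for_run(lambda: next(statuses), sleep=lambda s: None))  # SUCCEEDED
```

In n8n, the same idea would typically be a Wait node followed by an IF node that loops back until the status is final.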

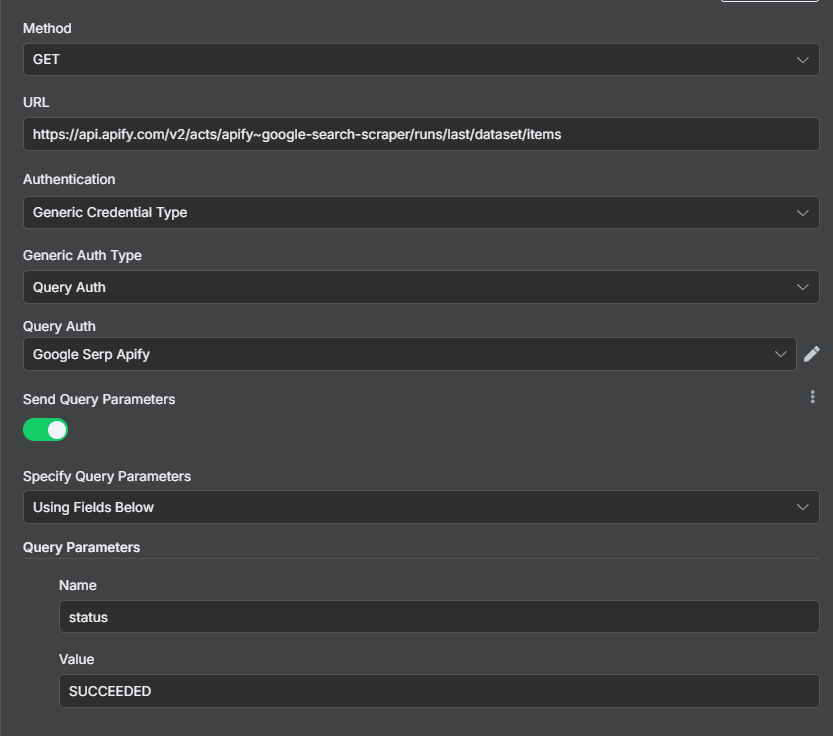

HTTP Request - Get

The GET request then retrieves our data now that it is ready.

Code

We then use this Python code to turn the results into one item per organic result for the AI Agent:

```python
# n8n provides 'items' as input
search_term = items[0]['json']['searchQuery']['term']
organic_results = items[0]['json']['organicResults']

output = []
for result in organic_results:
    # One output row per organic search result
    output.append({
        'Title': result.get('title', ''),
        'URL': result.get('url', ''),
        'Description': result.get('description', ''),
        # 'EmphasizedKeywords': ', '.join(result.get('emphasizedKeywords', [])),
        'search_term': search_term
    })

return [{'json': row} for row in output]
```
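The Code node above reads only `items[0]`. If you request more than one page of results, the actor may return one dataset item per page (an assumption worth checking against your own run), and the extra pages would be dropped. Here is a standalone sketch of the same transformation that loops over every item and can be tested outside n8n; `flatten_serp_items` and the sample data are hypothetical and only mirror the field names the Code node reads.

```python
from typing import Any


def flatten_serp_items(items: list[dict[str, Any]]) -> list[dict[str, Any]]:
    """Turn SERP items (assumed one per results page) into one item per organic result."""
    rows = []
    for item in items:
        page = item["json"]
        search_term = page.get("searchQuery", {}).get("term", "")
        for result in page.get("organicResults", []):
            rows.append({
                "Title": result.get("title", ""),
                "URL": result.get("url", ""),
                "Description": result.get("description", ""),
                "search_term": search_term,
            })
    return [{"json": row} for row in rows]


# Hypothetical two-page input shaped like the fields the Code node reads.
sample = [
    {"json": {"searchQuery": {"term": "building supply wholesaler virginia"},
              "organicResults": [{"title": "Example Supply", "url": "https://example.com/"}]}},
    {"json": {"searchQuery": {"term": "building supply wholesaler virginia"},
              "organicResults": [{"title": "Second Page Co", "url": "https://example.org/"}]}},
]
print(len(flatten_serp_items(sample)))  # 2
```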

AI Agent

I added the AI Agent to classify what type of business each scraped website is.

Below is the prompt I used in the AI Agent:

```text
Based on the Title, Url, Description, and search term write a 1-5 word classification on the business.

If you have information about the website already based on the URL or business title use that in your classification

Title: {{ $json.Title }}
URL: {{ $json.URL }}
Description: {{ $json.Description }}
Search Term: {{ $json.search_term }}
```
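If you want to try the prompt on a few rows before wiring up the agent, you can fill the same template in plain Python. This is a sketch: `render_prompt` is a hypothetical helper, and `str.format` placeholders stand in for n8n's `{{ $json.Title }}`-style expressions.

```python
PROMPT_TEMPLATE = (
    "Based on the Title, Url, Description, and search term write a 1-5 word "
    "classification on the business.\n\n"
    "If you have information about the website already based on the URL or business "
    "title use that in your classification\n\n"
    "Title: {Title}\n"
    "URL: {URL}\n"
    "Description: {Description}\n"
    "Search Term: {search_term}"
)


def render_prompt(row: dict) -> str:
    """Fill the prompt with one row produced by the Code node (extra keys are ignored)."""
    return PROMPT_TEMPLATE.format(**row)


print(render_prompt({
    "Title": "Virginia Building Supply Store | Metal Panels And More",
    "URL": "https://buildingsupplyva.com/",
    "Description": "Metal roofing and siding supplier",
    "search_term": "building supply wholesaler virginia",
}))
```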

OpenAI Chat Model

The model I used for this was: gpt-4o-mini

Structured Output Parser

I used the following JSON example in the Structured Output Parser:

```json
{
  "Title": "Virginia Building Supply Store | Metal Panels And More",
  "URL": "https://buildingsupplyva.com/",
  "Description": "Virginia Building Supply, situated in Meadows of Dan, Virginia, is the leading supplier of premium metal roofing and siding manufactured by Mod Metal, as well ...",
  "search_term": "building supply wholesaler virginia",
  "Business Classification": "building supply wholesaler"
}
```
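If you post-process the agent's output outside n8n, a small check can catch missing keys and enforce the 1-5 word limit from the prompt. A minimal sketch; `parse_classification` and `REQUIRED_KEYS` are hypothetical names, and the key set is taken from the JSON example above.

```python
import json

REQUIRED_KEYS = ("Title", "URL", "Description", "search_term", "Business Classification")


def parse_classification(raw: str) -> dict:
    """Parse the agent's JSON reply and check it matches the example's shape."""
    data = json.loads(raw)
    missing = [key for key in REQUIRED_KEYS if key not in data]
    if missing:
        raise ValueError(f"missing keys: {missing}")
    words = str(data["Business Classification"]).split()
    if not 1 <= len(words) <= 5:
        raise ValueError(f"classification should be 1-5 words, got {len(words)}")
    return data
```

Rows that fail the check could be routed to a retry or flagged for review instead of being written to the sheet.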

Google Sheets

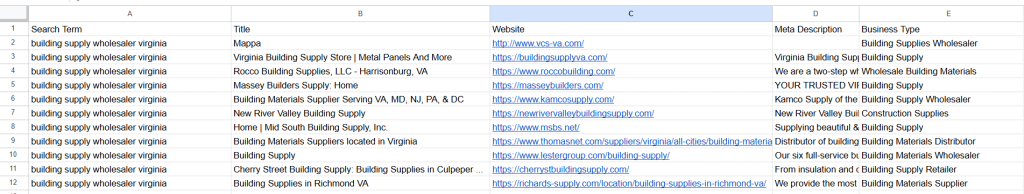

The results are then written to a Google Sheet, one row per website, with the search term, title, website, meta description, and business type.
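Each classified result maps to a row in a fixed column order. Here is a sketch of that mapping in Python; the sheet header names are hypothetical (match them to your own sheet), while the source keys come from the Code node and the Structured Output Parser example.

```python
# (sheet header, key in the classified result); headers are hypothetical.
SHEET_COLUMNS = [
    ("Search Term", "search_term"),
    ("Title", "Title"),
    ("Website", "URL"),
    ("Meta Description", "Description"),
    ("Business Type", "Business Classification"),
]


def to_sheet_row(result: dict) -> dict:
    """Map one classified result to {sheet header: value}, in sheet column order."""
    return {header: result.get(key, "") for header, key in SHEET_COLUMNS}


def to_sheet_values(results: list[dict]) -> list[list[str]]:
    """Header row plus one row per website, e.g. for a CSV export or a bulk write."""
    header = [h for h, _ in SHEET_COLUMNS]
    return [header] + [list(to_sheet_row(r).values()) for r in results]
```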

Outro

Just like that, you now have a workflow that scrapes Google SERP results and classifies each business.

If you found this helpful, feel free to share it on LinkedIn. If you need help with n8n or data work, I’m available for freelance projects.