N8N AI Agent

Using AI Agents is critical if you want to build out extensive workflows that can get tasks done. In this lesson we will go over the AI Agent and all its components.

If you would rather watch a video instead of reading an article, our YouTube video is linked down below.

Also if you need help with any Data or N8N needs, I’m taking on customers!

If you are brand new to N8N, you can sign up here.

When Chat Message Received

The first part of the tutorial uses the When Chat Message Received trigger for a chat interaction. You can either have this trigger feed directly into the AI Agent or place the AI Agent in the middle of a larger workflow.

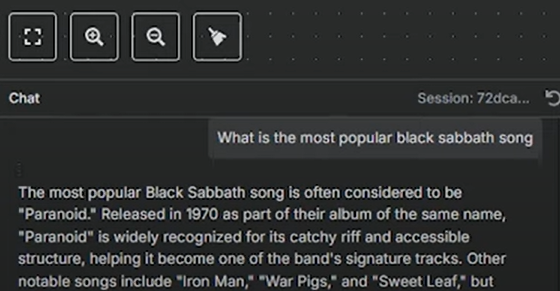

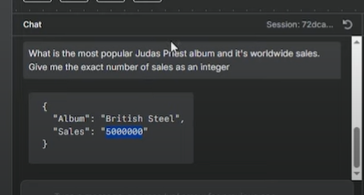

Below you’ll see the chat interface within N8N. This is easier to see in real time from the video above.

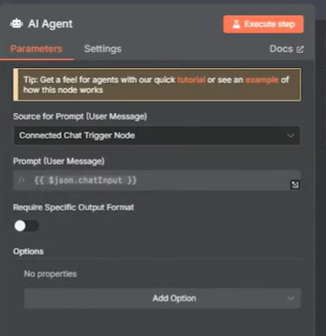

Inside the AI Agent, you don't have to define a prompt when using a chat trigger. The chat message itself is the prompt.

Chat Model

Your chat model is the LLM you plan on using with the AI Agent.

In the video we use GPT-4o from OpenAI, but you can use whatever you like: Grok, Gemini, Claude, or others. There is also the option to use OpenRouter, which allows you to switch models easily.

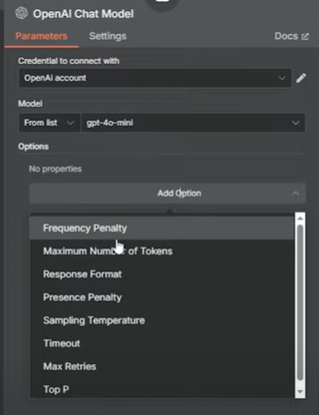

There are a ton of different options that you can tweak within your chat model. Here are the settings below.

Frequency Penalty

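The frequency penalty discourages the model from repeating the same phrases: the more often a token has already appeared, the more it is penalized. It's typically set between 0 and 2. Negative values encourage repetition instead.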

Maximum Number of Tokens

The maximum length of the model's response, measured in tokens. Each model has its own way of counting tokens and its own limits, so check the documentation.

Response Format

This can be set to text or JSON. I ignore this setting and use an output parser instead.

Presence Penalty

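The presence penalty is a one-time penalty applied to any word (token) that has already appeared in the text, no matter how many times it appeared. It nudges the model toward new words and topics.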
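To make the two penalties concrete, here is a minimal Python sketch of how they could adjust a model's scores before the next token is picked. It follows the formula OpenAI describes in its documentation: a token's score drops by its count times the frequency penalty, plus the presence penalty once if it has appeared at all. The function name `apply_penalties` and the toy numbers are made up for illustration, and other providers may handle this differently.

```python
from collections import Counter

def apply_penalties(logits, generated_tokens, frequency_penalty=0.0, presence_penalty=0.0):
    """Lower the score of tokens that already appeared in the generated text."""
    counts = Counter(generated_tokens)
    adjusted = {}
    for token, logit in logits.items():
        count = counts.get(token, 0)
        # Frequency penalty grows with every repeat; presence penalty applies once.
        seen = 1.0 if count > 0 else 0.0
        adjusted[token] = logit - count * frequency_penalty - seen * presence_penalty
    return adjusted

# Toy example: three candidate tokens start with the same score.
logits = {"cat": 2.0, "dog": 2.0, "bird": 2.0}
history = ["cat", "cat", "cat", "dog"]

adjusted = apply_penalties(logits, history, frequency_penalty=0.5, presence_penalty=0.25)
print(adjusted)  # {'cat': 0.25, 'dog': 1.25, 'bird': 2.0}
```

"cat" appeared three times, so it takes the biggest hit from the frequency penalty. "cat" and "dog" both take the same one-time presence penalty, and "bird" is untouched because it hasn't appeared yet.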

Sampling Temperature

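Controls the randomness of the output, typically known simply as temperature. Values usually range from 0 to 1: lower values give more predictable output, higher values give more varied output.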
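If you're curious what temperature does under the hood, here is a small sketch of the standard idea: the model's raw scores are divided by the temperature before being turned into probabilities. The scores below are invented, and real models work over tens of thousands of tokens, so treat this as an illustration rather than how any specific provider implements it.

```python
import math

def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities, sharpened or flattened by temperature."""
    if temperature <= 0:
        # A temperature of exactly 0 is usually treated as "always pick the top token".
        raise ValueError("temperature must be greater than 0 in this sketch")
    scaled = [x / temperature for x in logits]
    highest = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - highest) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

logits = [2.0, 1.0, 0.0]  # made-up scores for three candidate tokens

for temperature in (0.2, 1.0):
    probs = softmax_with_temperature(logits, temperature)
    print(temperature, [round(p, 3) for p in probs])
```

At the lower temperature almost all of the probability lands on the top token, which is why low values feel more deterministic.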

Timeout

How long the system waits for a response, in milliseconds.

Max Retries

How many times to retry a failed request before giving up.
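Timeout and max retries work together, and a rough sketch can make that clearer. The code below is a generic illustration, not N8N's actual implementation: `call_with_retries`, `flaky_request`, and the `wait_s` pause are all hypothetical names I made up for the example.

```python
import time

def call_with_retries(request_fn, max_retries=2, timeout_ms=60_000, wait_s=0.0):
    """Call request_fn once, then retry up to max_retries more times on timeout."""
    last_error = None
    for attempt in range(max_retries + 1):  # first attempt + retries
        try:
            # The timeout setting is in milliseconds; convert it to seconds here.
            return request_fn(timeout_s=timeout_ms / 1000)
        except TimeoutError as exc:
            last_error = exc
            if attempt < max_retries:
                time.sleep(wait_s)  # optional pause before the next attempt
    raise last_error

# Hypothetical request that times out twice, then succeeds.
calls = {"count": 0}

def flaky_request(timeout_s):
    calls["count"] += 1
    if calls["count"] < 3:
        raise TimeoutError("no response in time")
    return "ok"

result = call_with_retries(flaky_request, max_retries=2, timeout_ms=5000)
print(result, calls["count"])  # ok 3
```

With max retries set to 2, the request gets three chances in total. If all of them fail, the last error is raised and the workflow step fails.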

Top P

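Top P is an alternative to temperature for controlling diversity. It uses nucleus sampling: the model samples only from the most likely tokens whose combined probability reaches P. Adjust either temperature or Top P, not both. My preference is temperature.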
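Here is a minimal sketch of what nucleus (Top P) sampling does to the list of candidate tokens. The probabilities are invented and `top_p_filter` is just a name for this example, so take it as a picture of the general technique rather than any provider's exact code.

```python
def top_p_filter(probs, top_p):
    """Keep the most likely tokens until their combined probability reaches top_p."""
    ranked = sorted(probs.items(), key=lambda item: item[1], reverse=True)
    kept = {}
    cumulative = 0.0
    for token, p in ranked:
        kept[token] = p
        cumulative += p
        if cumulative >= top_p:
            break
    # Renormalize so the kept probabilities sum to 1 again.
    total = sum(kept.values())
    return {token: p / total for token, p in kept.items()}

# Made-up probabilities for the next token.
probs = {"the": 0.5, "a": 0.3, "an": 0.15, "zebra": 0.05}

nucleus = top_p_filter(probs, top_p=0.75)
print(sorted(nucleus))  # ['a', 'the']
```

With Top P at 0.75, the unlikely options ("an" and "zebra") are dropped entirely, and the model samples only from what is left.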

Memory

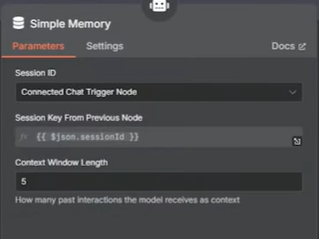

Memory lets the agent look back at prior messages within a conversation.

The context window length is how many past interactions the model should use as context.
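As a rough mental model, you can picture this kind of memory as a sliding window over the conversation. The sketch below is my own illustration in Python, not N8N's code: the `WindowMemory` class and its method names are hypothetical.

```python
from collections import deque

class WindowMemory:
    """Keep only the most recent interactions (one interaction = user + AI message)."""

    def __init__(self, context_window_length):
        # deque with maxlen silently drops the oldest item when full.
        self.turns = deque(maxlen=context_window_length)

    def add_interaction(self, user_message, ai_message):
        self.turns.append((user_message, ai_message))

    def as_messages(self):
        """Return the remembered turns in the order they would be sent to the model."""
        messages = []
        for user_message, ai_message in self.turns:
            messages.append({"role": "user", "content": user_message})
            messages.append({"role": "assistant", "content": ai_message})
        return messages

memory = WindowMemory(context_window_length=2)
memory.add_interaction("Hi, I'm Sam.", "Nice to meet you, Sam!")
memory.add_interaction("What's 2 + 2?", "4")
memory.add_interaction("And times 3?", "12")

print(len(memory.as_messages()))  # 4 (two interactions, two messages each)
```

With a window of 2, the first interaction has already been dropped, so the model would no longer know the user's name. A larger window remembers more but sends more tokens with every request.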

Tool

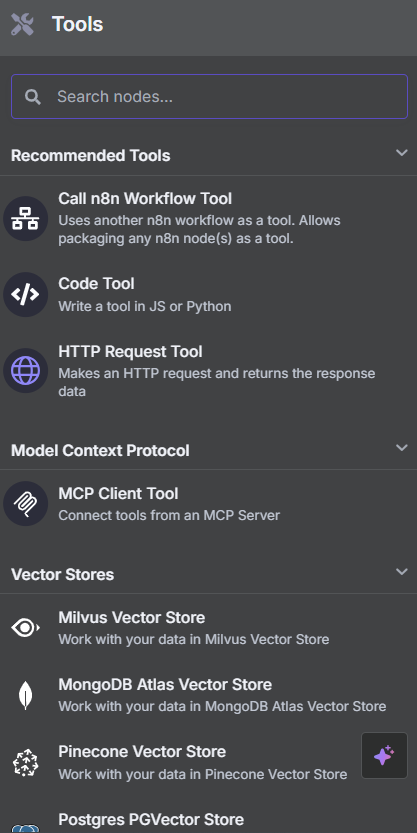

You can attach tools to your AI Agent so it can take actions beyond generating text.

N8N has hundreds of tools to choose from, and you can build your own custom tools with Python or JavaScript if needed.

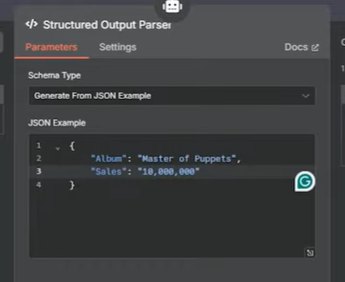
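Conceptually, a tool is just a named function with a description that the model can decide to call. The sketch below shows that general idea in plain Python. It is not how you define a tool inside N8N: the `TOOLS` registry, `run_tool_call`, and both example tools are hypothetical.

```python
def add_numbers(a: float, b: float) -> float:
    """Hypothetical tool: add two numbers."""
    return a + b

def word_count(text: str) -> int:
    """Hypothetical tool: count the words in a piece of text."""
    return len(text.split())

# The description is what helps the model decide which tool fits the task.
TOOLS = {
    "add_numbers": {"description": "Add two numbers.", "run": add_numbers},
    "word_count": {"description": "Count the words in a piece of text.", "run": word_count},
}

def run_tool_call(tool_call):
    """Look up the tool the model asked for and run it with the given arguments."""
    name = tool_call["name"]
    if name not in TOOLS:
        raise KeyError(f"unknown tool: {name}")
    return TOOLS[name]["run"](**tool_call["arguments"])

# Pretend the model decided to call a tool with these arguments.
tool_call = {"name": "add_numbers", "arguments": {"a": 2, "b": 3}}
print(run_tool_call(tool_call))  # 5
```

The agent sends the tool's result back to the model, which then uses it to write the final answer.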

Output Parser

The output parser defines what your output will look like. You describe the structure in JSON, and it has a ton of use cases within N8N.
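To show the idea behind an output parser, here is a small Python sketch that checks a model's reply against an expected structure. The field names (`sentiment`, `confidence`) and the `parse_agent_output` function are made up for this example, and N8N's own parser is configured in the node rather than written like this.

```python
import json

# Hypothetical structure we want back from the agent.
EXPECTED_FIELDS = {"sentiment": str, "confidence": float}

def parse_agent_output(raw_text):
    """Parse the model's reply as JSON and check it has the expected fields."""
    data = json.loads(raw_text)  # raises a ValueError subclass if the text isn't valid JSON
    for field, expected_type in EXPECTED_FIELDS.items():
        if field not in data:
            raise ValueError(f"missing field: {field}")
        if not isinstance(data[field], expected_type):
            raise ValueError(f"wrong type for field: {field}")
    return data

reply = '{"sentiment": "positive", "confidence": 0.92}'
parsed = parse_agent_output(reply)
print(parsed["sentiment"], parsed["confidence"])  # positive 0.92
```

Because the output always has the same shape, later nodes in the workflow can rely on fields like `sentiment` being there instead of digging through free-form text.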

AI Agent in a Workflow (Not Chat Message Received)

If you aren’t using chat, you have to define your prompt within the AI Agent as shown below.

Outro

That’s it for the basics of AI Agents in N8N. If you need help with any Data or N8N needs, I’m taking on customers!

Ryan is a Data Scientist at a fintech company, where he focuses on fraud prevention in underwriting and risk. Before that, he worked as a Data Analyst at a tax software company. He holds a degree in Electrical Engineering from UCF.