BeautifulSoup4 find vs find_all

import requests

from bs4 import BeautifulSoup

import pandas as pd

import re

html = """

<!DOCTYPE html>

<html lang="en">

<head>

<meta charset="UTF-8">

<meta name="viewport" content="width=device-width, initial-scale=1.0">

<title>Ultra Running Events</title>

</head>

<body class="site-body">

<header class="site-header">

<h1 class="site-title">Ultra Running Events</h1>

<nav class="main-nav">

<ul class="nav-list">

<li><a class="nav-link" href="#races-50">50 Mile Races</a></li>

<li><a class="nav-link" href="#races-100">100 Mile Races</a></li>

</ul>

</nav>

</header>

<section id="races-50" class="race-section race-50">

<h2 class="section-title-50">50 Mile Races</h2>

<ul class="race-list-50">

<li class="race-item">

<h3 class="race-name"><a href="https://www.ryandataraces.com/rocky-mountain-50">Rocky Mountain 50</a></h3>

<p class="race-date highlighted">Date: August 10, 2025</p>

<p class="race-location">Location: Boulder, Colorado</p>

</li>

<li class="race-item">

<h3 class="race-name"><a href="https://www.ryandataraces.com/desert-dash-50">Desert Dash 50</a></h3>

<p class="race-date">Date: September 14, 2025</p>

<p class="race-location">Location: Moab, Utah</p>

</li>

</ul>

</section>

<section id="races-100" class="race-section race-100">

<h2 class="section-title-100">100 Mile Races</h2>

<ul class="race-list-100">

<li class="race-item">

<h3 class="race-name"><a href="https://www.ryandataraces.com/mountain-madness-100">Mountain Madness 100</a></h3>

<p class="race-date">Date: July 5, 2025</p>

<p class="race-location">Location: Lake Tahoe, California</p>

</li>

<li class="race-item">

<h3 class="race-name"><a href="https://www.ryandataraces.com/endurance-beast-100">Endurance Beast 100</a></h3>

<p class="race-date">Date: October 3, 2025</p>

<p class="race-location">Location: Asheville, North Carolina</p>

</li>

</ul>

</section>

<footer class="site-footer">

<p>© 2025 Ultra Running Events</p>

</footer>

</body>

</html>

"""

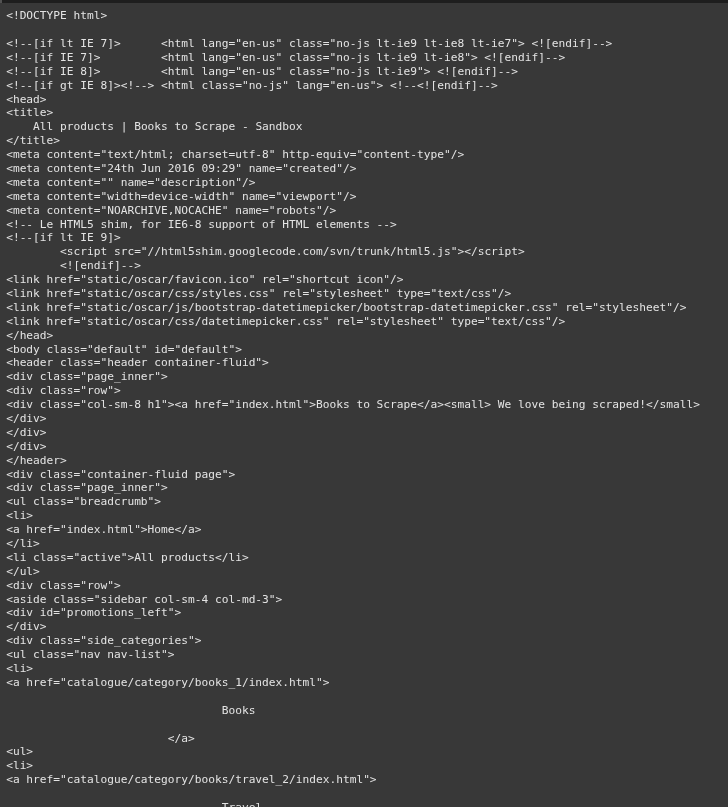

URL = 'https://books.toscrape.com/'

Part 1: Parsing the HTML from your first page. The response is a snapshot of the page's HTML at the time of the request.

response = requests.get(URL)

if response.status_code == 200:
    print("Page fetched successfully!")
else:
    print("Failed to retrieve page:", response.status_code)

soup = BeautifulSoup(response.text, 'html.parser')

soup_html = BeautifulSoup(html, 'html.parser')

soup

Example 3 - Use soup.prettify()

print(soup.prettify())

Example 4 - Grab Page title

soup_html.title

Example 5 - Grab Page H2 (This only grabs the first one...)

soup_html.h2

Example 6 - Grab Page title text

soup_html.title.get_text()

soup_html.h2['class']
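Indexing a tag with `['class']` returns a list, because `class` can hold several values, and indexing an attribute that is not present raises `KeyError`. The sketch below uses a one-line HTML snippet (an assumption made so the example runs on its own) to show the list result and the safer `.get()` lookup.

```python
from bs4 import BeautifulSoup

# Small stand-in for the tutorial's HTML (assumed here for a self-contained run)
snippet = '<h2 class="section-title-50">50 Mile Races</h2>'
h2 = BeautifulSoup(snippet, "html.parser").h2

# class is multi-valued, so Beautiful Soup returns a list of class names
classes = h2["class"]

# .get() returns None (or a supplied default) instead of raising KeyError
missing_id = h2.get("id")
fallback_id = h2.get("id", "no-id")

print(classes)      # ['section-title-50']
print(missing_id)   # None
print(fallback_id)  # no-id
```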

The next examples compare find() and find_all().

| Method | Returns | Use When |
| --- | --- | --- |
| `find()` | The **first** matching element | You want a single element |
| `find_all()` | A **list** of all matches | You want to loop through multiple items |
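To make the difference in return values concrete, here is a minimal sketch on a two-heading snippet (the snippet and the name `soup_demo` are assumptions for illustration). It also shows what each method gives back when nothing matches.

```python
from bs4 import BeautifulSoup

snippet = "<h2>50 Mile Races</h2><h2>100 Mile Races</h2>"
soup_demo = BeautifulSoup(snippet, "html.parser")

first = soup_demo.find("h2")      # a single Tag
every = soup_demo.find_all("h2")  # a list-like ResultSet of Tags
nothing = soup_demo.find("h4")    # None when there is no match
empty = soup_demo.find_all("h4")  # empty result when there is no match

print(first.get_text())            # 50 Mile Races
print(len(every))                  # 2
print(nothing is None, len(empty)) # True 0
```

Because `find()` can return `None`, calling `.get_text()` directly on its result fails when the element is missing, while looping over an empty `find_all()` result simply does nothing.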

Example 8 Find

find() returns only the first match; it does not give you direct access to the second, third, and so on.

soup_html.find('h2')

Example 10 Find with Class

soup_html.find('h2', class_ = 'section-title-50').get_text()

soup_html.find('h2', class_ = 'section-title-100').get_text()

Example 11 Find Chain Requests

#Finds the first <li> (list item) element in the document

#From that <li> element, it then finds the first <a> (anchor) tag inside that <li>.

soup_html.find('li').find('a')
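A chained call only searches inside whatever the first `find()` returned, and it raises `AttributeError` if that first call returned `None`. The following sketch, using a made-up list where the first `<li>` has no link, shows one way to guard each step.

```python
from bs4 import BeautifulSoup

# Hypothetical snippet: the first <li> contains no <a> tag
snippet = "<ul><li>No link here</li><li><a href='#races-50'>50 Mile Races</a></li></ul>"
soup_demo = BeautifulSoup(snippet, "html.parser")

first_li = soup_demo.find("li")

# Guard each step so a missing element does not raise AttributeError
link = first_li.find("a") if first_li is not None else None
link_text = link.get_text() if link is not None else "no link found"

print(link_text)  # no link found

# Searching the whole document instead still finds the link in the second <li>
print(soup_demo.find("a").get_text())  # 50 Mile Races
```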

Example 12 Separating out Chain Requests

list_item = soup_html.find('li')

list_item_a = list_item.find('a')

list_item_a

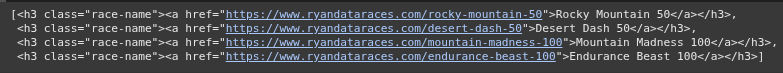

#Example 13 Find All Races

soup_html.find_all('h2')

Example 14 Find the first or second race section

soup_html.find_all('h2')[0]

soup_html.find_all('h2')[0].get_text()

soup_html.find_all('h2')[1]

soup_html.find_all('h2')[1].get_text()

Example 15 Find all and print out the text

race_types = soup_html.find_all('h2')

for race in race_types:
    print(race.get_text())
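If the goal is to keep the text rather than print it, the same loop can be written as a list comprehension. This is a small sketch on an assumed two-heading snippet; `get_text(strip=True)` trims the surrounding whitespace.

```python
from bs4 import BeautifulSoup

# Assumed snippet with stray whitespace around the first heading
snippet = "<h2> 50 Mile Races </h2><h2>100 Mile Races</h2>"
soup_demo = BeautifulSoup(snippet, "html.parser")

# Collect the heading text into a plain Python list
titles = [h2.get_text(strip=True) for h2 in soup_demo.find_all("h2")]

print(titles)  # ['50 Mile Races', '100 Mile Races']
```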

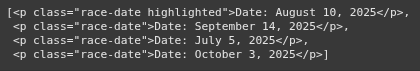

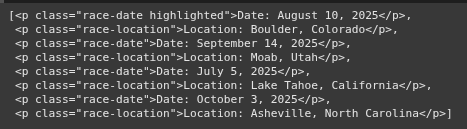

Example 16 Find all with a class (race dates)

soup_html.find_all('p', class_ = 'race-date')

Example 17 Find all with either class

soup_html.find_all("p", class_=["race-date", "race-location"])
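Two details of class matching are easy to miss: `class_='race-date'` also matches an element whose class attribute is `race-date highlighted`, and the "either class" search can be written as a CSS selector with `select()`. The sketch below reuses three `<p>` tags from the sample HTML; `soup_demo` is a name introduced only for this example.

```python
from bs4 import BeautifulSoup

snippet = """
<p class="race-date highlighted">Date: August 10, 2025</p>
<p class="race-date">Date: September 14, 2025</p>
<p class="race-location">Location: Moab, Utah</p>
"""
soup_demo = BeautifulSoup(snippet, "html.parser")

# Matches any <p> that has race-date among its classes, including the highlighted one
dates = soup_demo.find_all("p", class_="race-date")

# CSS selector form of "race-date OR race-location"
either = soup_demo.select("p.race-date, p.race-location")

print(len(dates))   # 2
print(len(either))  # 3
```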

Example 18 Find with OR on attributes (href, title, id, class, src, alt, type)

soup_html.find_all("p", attrs={"class": ["race-date", "race-location"]})

soup_html.find_all("a", attrs={"href": ["#races-50", "#races-100"]})

#Example 19 Search for Strings

soup_html.find_all("a", string='Mountain Madness 100')

#Example 20 Search for Strings with regex

soup_html.find_all("a", string=re.compile('Madness'))
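A plain string passed to `string=` has to equal the tag's full text, while a compiled regex matches anywhere in it. Here is a short sketch on two assumed links that shows the difference, plus a case-insensitive variant.

```python
import re
from bs4 import BeautifulSoup

# Hypothetical hrefs; only the link text matters here
snippet = '<a href="/mm">Mountain Madness 100</a><a href="/eb">Endurance Beast 100</a>'
soup_demo = BeautifulSoup(snippet, "html.parser")

# A plain string must equal the whole text, so a partial word finds nothing
exact = soup_demo.find_all("a", string="Madness")

# A compiled pattern is searched within the text
partial = soup_demo.find_all("a", string=re.compile("Madness"))

# Flags such as IGNORECASE work as usual
ignore_case = soup_demo.find_all("a", string=re.compile("madness", re.IGNORECASE))

print(len(exact), len(partial), len(ignore_case))  # 0 1 1
```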

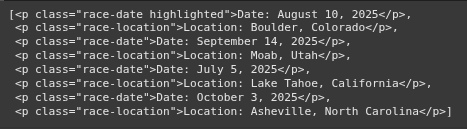

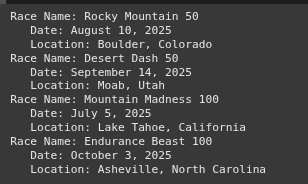

Example 21 Parent Sibling Child

h3_races = soup_html.find_all("h3")

h3_races

for h3 in h3_races:
    print("Race Name:", h3.get_text())
    # Get next siblings that are <p> tags
    for sibling in h3.find_next_siblings('p'):
        print(" ", sibling.get_text())
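The loop above covers siblings. For the parent and child directions, a minimal sketch could look like the following; it uses one race item cut down from the sample HTML, and `soup_demo` is a name assumed for this example.

```python
from bs4 import BeautifulSoup

snippet = """
<section id="races-50">
<ul>
<li class="race-item">
<h3 class="race-name">Rocky Mountain 50</h3>
<p class="race-date">Date: August 10, 2025</p>
<p class="race-location">Location: Boulder, Colorado</p>
</li>
</ul>
</section>
"""
soup_demo = BeautifulSoup(snippet, "html.parser")
h3 = soup_demo.find("h3")

# Parent: the element that directly contains the <h3>
item = h3.parent

# Ancestor: walk upward until a <section> is found
section = h3.find_parent("section")

# Children: direct child tags only (recursive=False skips deeper descendants)
child_tags = [child.name for child in item.find_all(True, recursive=False)]

print(item.name)      # li
print(section["id"])  # races-50
print(child_tags)     # ['h3', 'p', 'p']
```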

Now working with a real site instead of the basic HTML above.

https://books.toscrape.com/

print(soup.prettify())

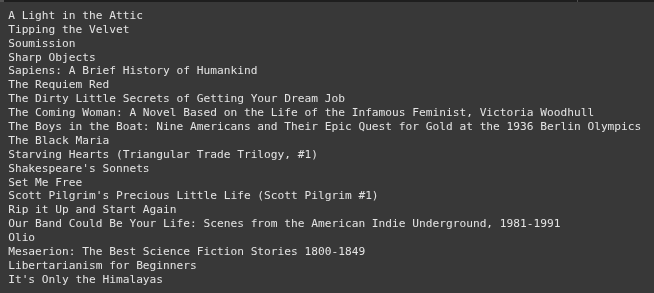

Example 22 Find all books on a page and print them out

#searches for all <article> elements in the HTML that have the class "product_pod".

books = soup.find_all("article", class_="product_pod")

#.h3: accesses the <h3> tag inside the article.

#.a: accesses the <a> tag inside the <h3>, which contains the link to the book's detail page.

#["title"]: extracts the title attribute of the <a> tag, which holds the title of the book.

for book in books:
    print(book.h3.a["title"])

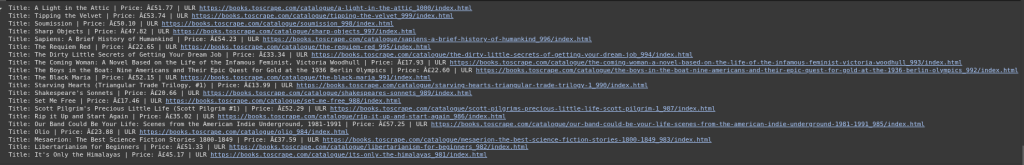

Example 23 Grab multiple things at once

for book in books:
    title = book.h3.a['title']
    price = book.find('p', class_='price_color').get_text()
    relative_url = book.h3.a['href']
    book_url = URL + relative_url
    print(f"Title: {title} | Price: {price} | URL: {book_url}")
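Plain concatenation works here because `URL` ends with a slash and the link is relative to the home page. As a hedged alternative, `urllib.parse.urljoin` resolves a relative link against whichever page it was found on. The page URLs and hrefs below are made-up placeholders for illustration, not values read from the site.

```python
from urllib.parse import urljoin

# Hypothetical base pages and relative hrefs, used only to show the resolution rules
home_page = "https://books.toscrape.com/"
listing_page = "https://books.toscrape.com/catalogue/page-2.html"

from_home = urljoin(home_page, "catalogue/some-book_1/index.html")
from_listing = urljoin(listing_page, "some-book_1/index.html")

# String concatenation glues the href onto the page file name instead
concatenated = listing_page + "some-book_1/index.html"

print(from_home)     # https://books.toscrape.com/catalogue/some-book_1/index.html
print(from_listing)  # https://books.toscrape.com/catalogue/some-book_1/index.html
print(concatenated)  # https://books.toscrape.com/catalogue/page-2.htmlsome-book_1/index.html
```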

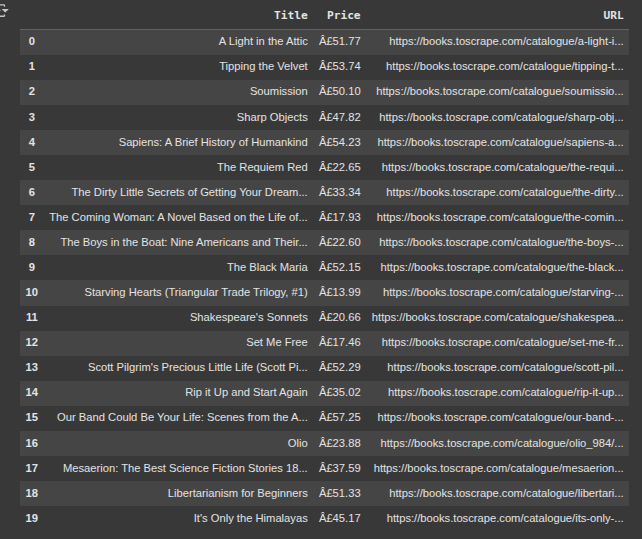

Example 24 Save Multiple Things to a Dataframe

data = []

for book in books:
    title = book.h3.a['title']
    price = book.find('p', class_='price_color').text
    relative_url = book.h3.a['href']
    book_url = URL + relative_url
    data.append({
        'Title': title,
        'Price': price,
        'URL': book_url
    })

df = pd.DataFrame(data)

df

Example 25 Clean the DataFrame Price Column

df['price_clean'] = df['Price'].str.replace('£', '', regex=False).astype(float)

# Convert GBP to USD (example rate only: 1 GBP = 1.0737 USD; check the current rate before relying on it)

exchange_rate = 1.0737

df['price_usd'] = df['price_clean'] * exchange_rate

df_final = df[['Title', 'price_usd', 'URL']]

df_final
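Removing the `£` sign assumes the price text contains nothing else besides the number. If the page text was decoded with the wrong encoding, an extra character can appear in front of the pound sign and the float conversion would then fail. A more tolerant sketch is to pull out just the numeric part with a regex; `parse_price` is a hypothetical helper, not part of any library.

```python
import re

def parse_price(text):
    """Return the first decimal number found in a price string as a float."""
    match = re.search(r"\d+(?:\.\d+)?", text)
    if match is None:
        raise ValueError(f"no number found in {text!r}")
    return float(match.group())

print(parse_price("£51.77"))   # 51.77
print(parse_price("Â£51.77"))  # 51.77 (stray character from a mis-decoded page is ignored)
```

With pandas, the same helper could be applied as `df['Price'].apply(parse_price)`.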

Example 26 Export as a CSV File

df_final.to_csv('scraped_book_data.csv')

df_final.to_excel('scraped_book_data.xlsx')
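By default both export calls also write the DataFrame index as an extra first column. The sketch below, on a small made-up frame, shows the effect of `index=False` by writing to a string and reading it back. Note also that `to_excel` needs an Excel writer package such as openpyxl to be installed.

```python
import io
import pandas as pd

# Made-up rows standing in for the scraped data
df_demo = pd.DataFrame({"Title": ["Book A", "Book B"], "price_usd": [1.5, 2.0]})

# With no path, to_csv returns the CSV text instead of writing a file
with_index = pd.read_csv(io.StringIO(df_demo.to_csv()))
without_index = pd.read_csv(io.StringIO(df_demo.to_csv(index=False)))

print(list(with_index.columns))     # ['Unnamed: 0', 'Title', 'price_usd']
print(list(without_index.columns))  # ['Title', 'price_usd']
```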

Ryan is a Data Scientist at a fintech company, where he focuses on fraud prevention in underwriting and risk. Before that, he worked as a Data Analyst at a tax software company. He holds a degree in Electrical Engineering from UCF.